I am a postdoctoral researcher collaborating with Academy Prof. Guoying Zhao at the University of Oulu and Prof. Jukka Leppänen at the University of Turku. Prior to that, I received my Ph.D. degree from the Institute of Automation, Chinese Academy of Sciences (CASIA) and the University of Chinese Academy of Sciences (UCAS), supervised by Prof. Jianhua Tao.

My research interests lie at the intersection of affective computing and deep learning, with a specific focus on multimodal learning and self-supervised learning. I have published several papers in top international AI journals and conferences, such as Information Fusion, IEEE Trans. on Affective Computing, ACM MM, and ICASSP.

I am also the winner of several international competitions in affective computing, such as MuSe and MEGC.

Feel free to reach out if you’re interested in my work and want to explore potential collaborations.

📜 Research Area

- Computer Vision: Facial Expression Recognition; Micro-Expression Recognition; Audio-Visual Emotion Recognition; Multimodal Large Language Model

- Speech Signal Processing: Speech Emotion Recognition

- Natural Language Processing: Multimodal Sentiment Analysis; Emotion Recognition in Conversation; Large Language Model

- Self-supervised Learning: Contrastive Learning; Masked Data Modeling

🔥 News

- 2024.07: 🎉🎉 SVFAP is accepted by IEEE Trans. on Affective Computing.

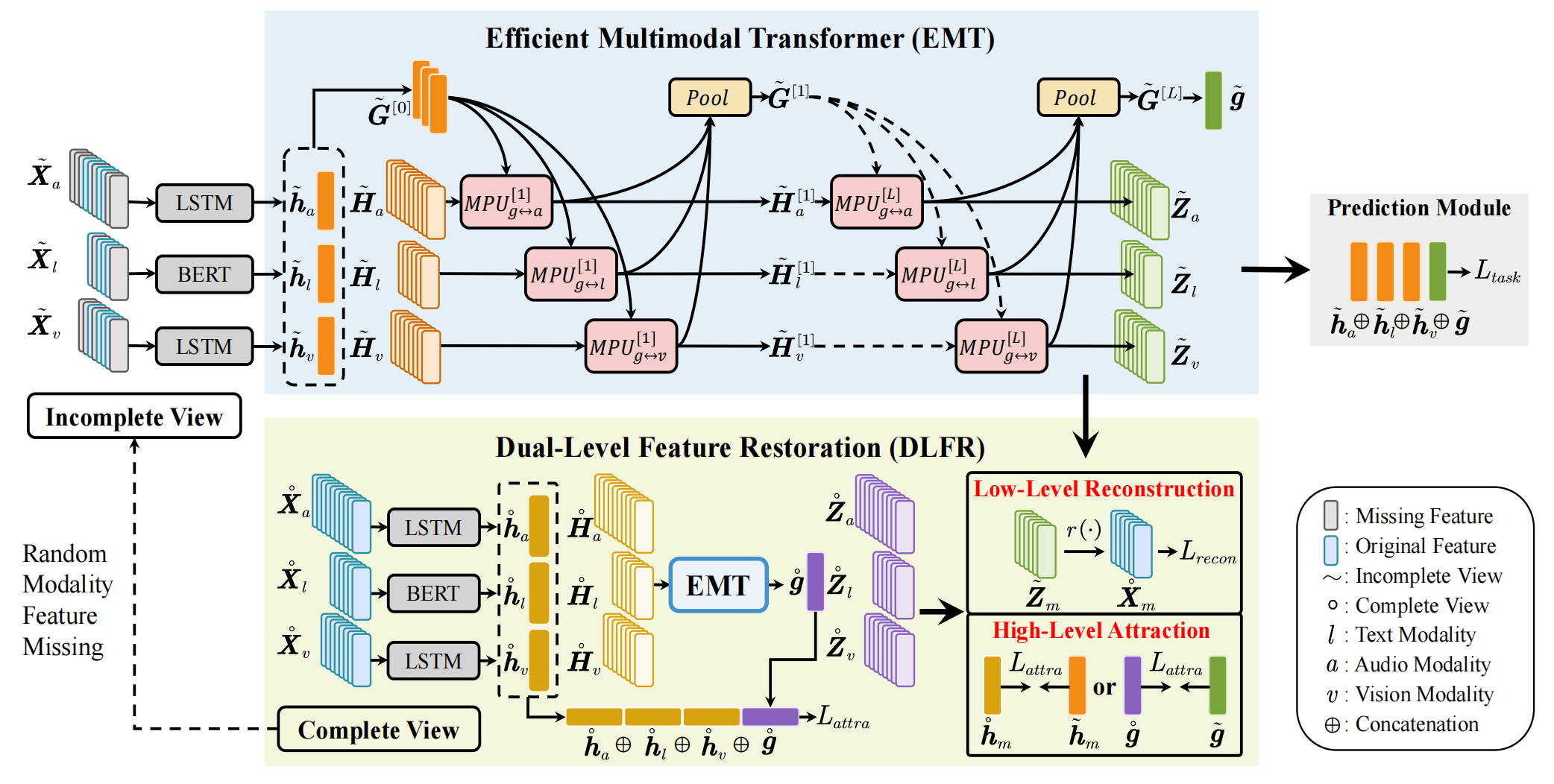

- 2024.07: 🎉🎉 EMT-DLFR is selected as an ESI Highly Cited Paper.

- 2024.03: 🎉🎉 HiCMAE and GPT-4V with Emotion are accepted by Information Fusion.

- 2023.07: 🎉🎉 MAE-DFER is accepted by ACM MM 2023.

- 2023.04: 🎉🎉 EMT-DLFR is accepted by IEEE Trans. on Affective Computing.

📝 Publications

(* Equal contribution, # Corresponding author)

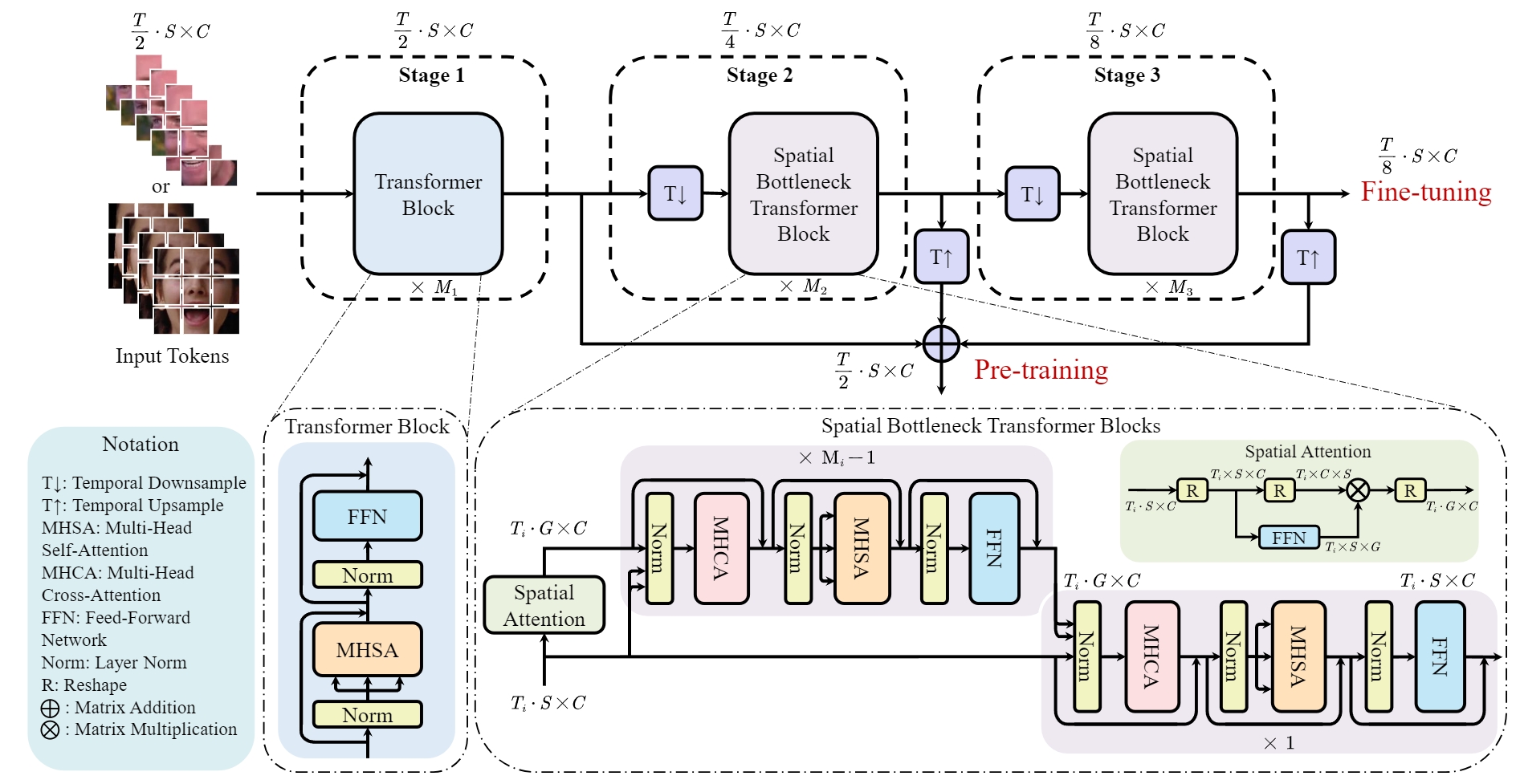

SVFAP: Self-supervised Video Facial Affect Perceiver

Licai Sun, Zheng Lian#, Kexin Wang, Yu He, Mingyu Xu, Haiyang Sun, Bin Liu#, Jianhua Tao#

IEEE Trans. on Affective Computing, 2024

- SVFAP explores large-scale self-supervised pre-training for video facial affect analysis and achieves superior performance on nine datasets spanning three different tasks.

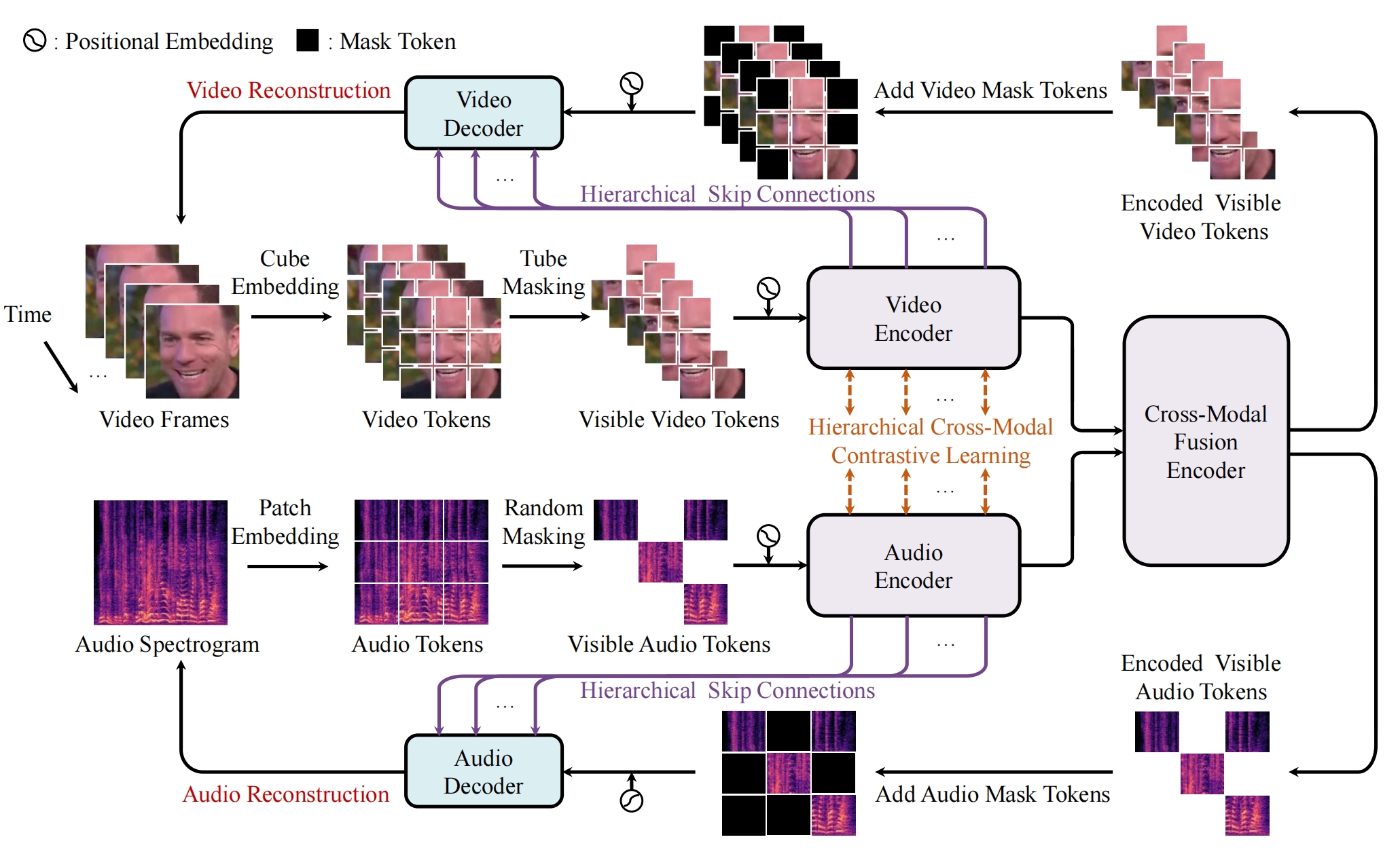

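HiCMAE: Hierarchical Contrastive Masked Autoencoder for Self-Supervised Audio-Visual Emotion Recognition

Licai Sun, Zheng Lian, Bin Liu#, Jianhua Tao#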

- HiCMAE introduces a novel hierarchical contrastive masked autoencoder for self-supervised audio-visual emotion recognition (AVER) and achieves SOTA performance on nine popular AVER datasets.

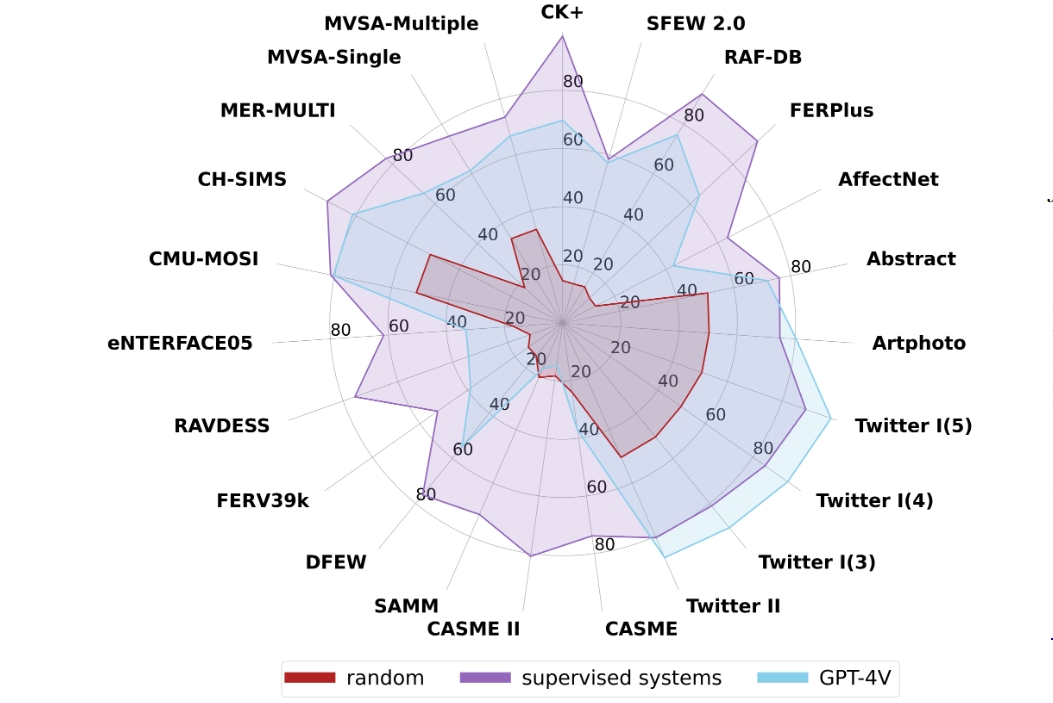

GPT-4V with Emotion: A Zero-shot Benchmark for Generalized Emotion Recognition

Zheng Lian, Licai Sun, Haiyang Sun, Kang Chen, Zhuofan Wen, Hao Gu, Bin Liu#, Jianhua Tao#

- This paper quantitatively evaluates the emotional intelligence of GPT-4V on 21 benchmark datasets covering 6 emotion recognition tasks.

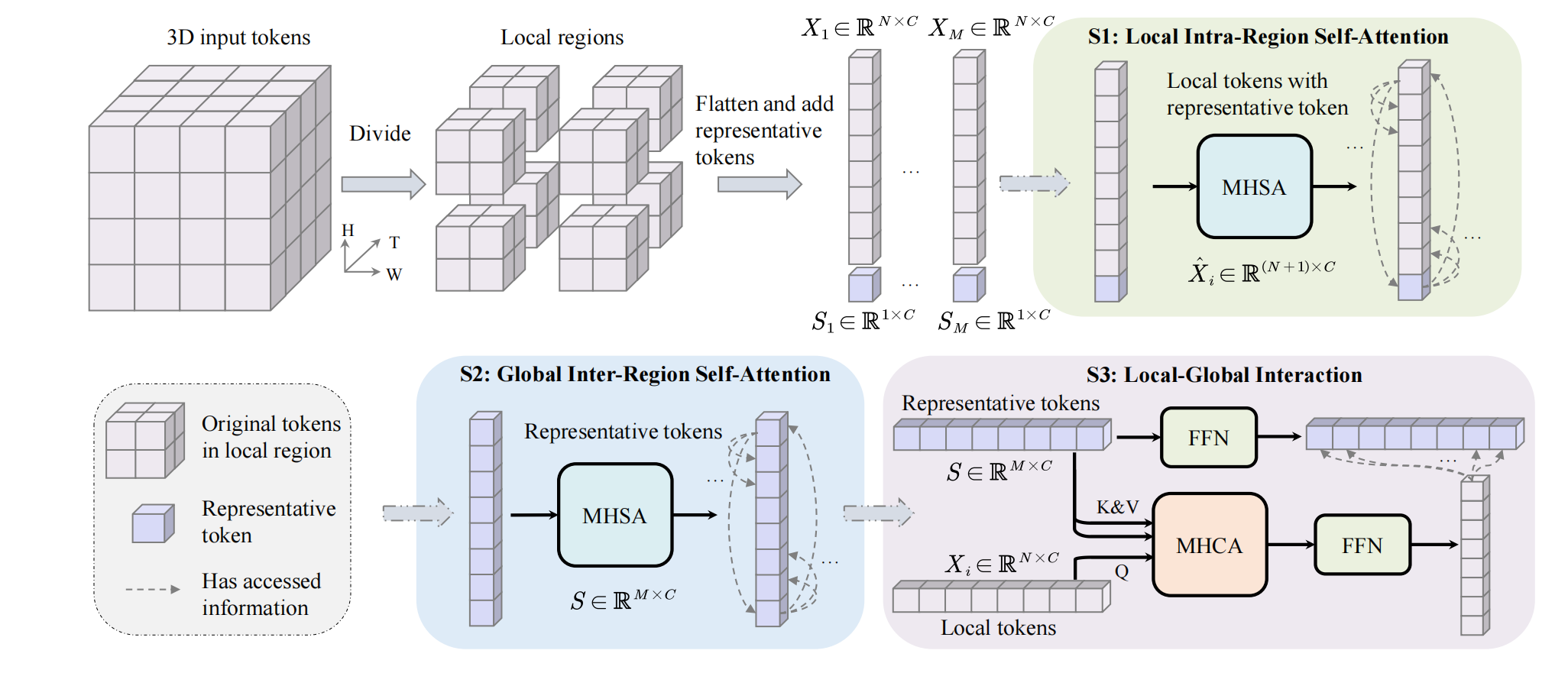

MAE-DFER: Efficient Masked Autoencoder for Self-supervised Dynamic Facial Expression Recognition

Licai Sun, Zheng Lian, Bin Liu, Jianhua Tao

- MAE-DFER presents an early attempt to leverage large-scale self-supervised pre-training for dynamic facial expression recognition (DFER) and achieves strong performance on six popular DFER datasets.

- Explainable Multimodal Emotion Reasoning, Zheng Lian, Licai Sun, Mingyu Xu, Haiyang Sun, Ke Xu, Zhuofan Wen, Shun Chen, Bin Liu, Jianhua Tao, arXiv 2023

- MER 2023: Multi-label learning, modality robustness, and semi-supervised learning, Zheng Lian, Haiyang Sun, Licai Sun, Kang Chen, Mingyu Xu, Kexin Wang, Ke Xu, Yu He, Ying Li, Jinming Zhao, Ye Liu, Bin Liu, Jiangyan Yi, Meng Wang, Erik Cambria, Guoying Zhao, Björn W Schuller, Jianhua Tao, ACM MM 2023

- GCNet: graph completion network for incomplete multimodal learning in conversation, Zheng Lian, Lan Chen, Licai Sun, Bin Liu#, Jianhua Tao#, TPAMI 2023

- Multimodal Cross- and Self-Attention Network for Speech Emotion Recognition, Licai Sun, Bin Liu, Jianhua Tao, Zheng Lian, ICASSP 2021

- Multimodal Temporal Attention in Sentiment Analysis, Yu He*, Licai Sun*, Zheng Lian, Bin Liu, Jianhua Tao, Meng Wang, Yuan Cheng, MuSe 2022

- Multimodal emotion recognition and sentiment analysis via attention enhanced recurrent model, Licai Sun*, Mingyu Xu*, Zheng Lian, Bin Liu, Jianhua Tao, Meng Wang, Yuan Cheng, MuSe 2021

- Multi-modal continuous dimensional emotion recognition using recurrent neural network and self-attention mechanism, Licai Sun*, Zheng Lian*, Bin Liu, Jianhua Tao, Mingyue Niu, MuSe 2020

🎖 Honors and Awards

- 2023.10: 🎉🎉 Winner of the Micro-Expression and Macro-Expression Spotting Task at MEGC 2023 in ACM MM 2023.

- 2022.10: 🎉🎉 The MuSe-Stress 2022 Multimodal Sentiment Analysis Challenge Prize at MuSe 2022 in ACM MM 2022.

- 2021.10: 🎉🎉 The MuSe-Wilder 2021 Multimodal Sentiment Analysis Challenge Prize at MuSe 2021 in ACM MM 2021.

- 2021.10: 🎉🎉 The MuSe-Sent 2021 Multimodal Sentiment Analysis Challenge Prize at MuSe 2021 in ACM MM 2021.

- 2021.10: 🎉🎉 The MuSe-Physio 2021 Multimodal Sentiment Analysis Challenge Prize at MuSe 2021 in ACM MM 2021.

- 2020.10: 🎉🎉 The 2020 Multimodal Sentiment in-the-Wild Challenge Prize at MuSe 2020 in ACM MM 2020.

📖 Education

- 2019.09 - 2024.06, Ph.D. in Computer Applied Technology, Institute of Automation, Chinese Academy of Sciences and University of Chinese Academy of Sciences, Beijing, China.

- 2016.09 - 2019.06, M.Sc. in Computer Technology, University of Chinese Academy of Sciences, Beijing, China.

- 2012.09 - 2016.06, B.Eng. in Electronic and Information Technology, Beijing Forestry University, Beijing, China.

💬 Professional Services

- Journal Reviewer: IEEE Trans. on Affective Computing, Speech Communication, Engineering Applications of Artificial Intelligence.

- Conference Reviewer: ACM MM (2024, 2023), ICASSP (2022), Interspeech (2020).

- Program Committee: MER2023@ACM MM 2023 Grand Challenge and MRAC2023@ACM MM 2023 Workshop.